Platform Teams: Reducing My Own Bad Decisions

How actual data reduces self-sabotage in platform team efforts

Co-Author: David Gourley, Co-Founder and CTO - Jellyfish

The inspiration for this edition of the CloudedVision Newsletter came from running into Jellyfish working with a customer. A CIO asked if we had worked together before as Jellyfish works to help CIOs understand their spending and what that spend produces. Since HashiCorp is interested in optimizing workflow, it seemed only natural to see if we could better understand automated workflow's effects on a business with better measures. The more we talked, the more we realized the discussion might impact more folks than the two or three of us, so we wrote a few thoughts for the general public.

With that, enjoy the latest CloudedVision Newsletter!

Introduction

There is a massive focus on centralizing cloud operations through this mystical organization that the industry calls the “platform team.” This organization is more complicated to lock down than many because it takes many forms and names. The CCOE (Cloud Center of Excellence), the Cloud Community of Practice, the Public Cloud Acceleration Team, the Dev/Ops team, etc. While all of these terms vie for attention in this domain, they all point to some form of centralization of best practices to improve onboarding, reduce cognitive load (the amount of information one person must retain to be effective), and drive faster, cost-effective deployment patterns.

For this write-up, we will simplify the domain of rhetoric to the “platform team” as a bit of a catch-all. We’ve published other works that dig into team structures considering the trade-offs between different forms, but for now, let’s define the platform team (PT for short, as we will use it often) as the organization responsible for centralizing and automating core IT services (infrastructure, storage, networking, cloud services, SaaS, PaaS, etc.) to improve engineering throughput and quality throughout an organization.

Many vendors target that team as a buying center in response to this organic shift in the enterprise. After all, if I want to sell a product to someone hoping for global adoption, the platform team is the best place to land. However, if this organization becomes a buying center, vendors quickly realize they are not selling into individual use cases but into a system response to a problem domain. This applies pressure to the vendor and platform team during a sales cycle. The platform team MUST consider the systemic impact of your tooling. It cannot only evaluate it against the two line-of-business use cases you are ideally suited for. No, if the Platform Team truly considers their global remit, tools and workflows are gauged against system-wide goals. Thus, technologies that apply to a small group of applications may be a tech “vended” by the PT automation efforts but should not be managed (or sold?) to the PT. While this topic can wander into interesting territory as we consider the market and customer implications, it suffices that tools and workflow at the PT level should consider broad implications (cost, speed, risk, compliance, legal, etc.).

This style of remit puts pressure on decisions. A change to a workflow used by 100 applications at a bank has a significant impact over one within a single application’s CI/CD pipeline. This means that platform teams must build in such a way as to forgive mistakes by being cleanly fungible, agile, and as independent of underlying tech bias as possible. The PT is not focused on tech as much as they are on process/workflow. How do we improve our decision-making from a systemic point of view to avoid our ignorance and stupidity? I’m talking about me here. You are brilliant and understand all the possible pitfalls of every decision you have made, but I can only dream of knowing the future. So, I need a way to mitigate my bad decisions with measures concerning system-wide decision-making if I hope to see a less bumpy move to a platform team.

We hope to help provide some perspective on how we might enhance qualitative measures (like developer experience measures - NPS, for example), with quantitative measures (where does our money go and what does it produce?) to equip platform teams with a better understanding of the decisions they are making.

Industry Response

The tech industry recognizes this deficit and comes at it from a thousand directions. Ticketing systems show measures of ticket times, DORA metrics, observability tools, log aggregation products, and more seek to provide telemetry around how work moves through your digital supply chain. While the metrics are often helpful, the implementation generally requires an all-in commitment to rationalize all signals. While your Git vendor throws off telemetry, you also have data coming from Jira or similar.

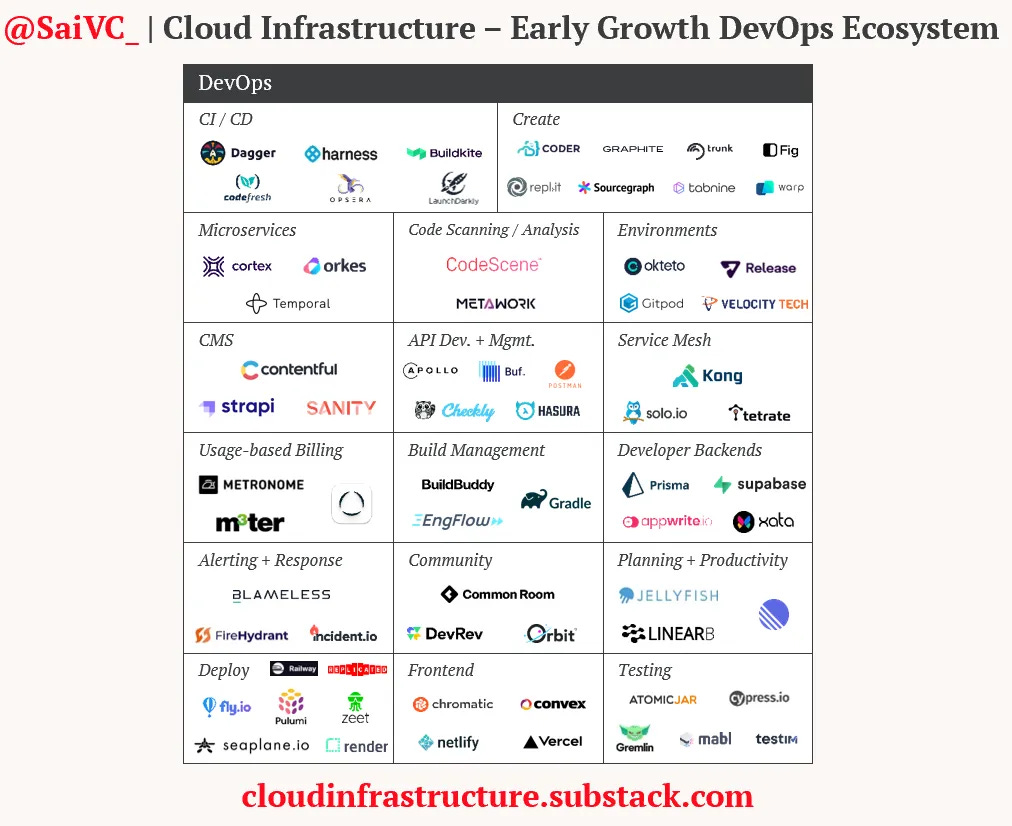

Sai Senthilkumar from Redpoint Investing published a substack looking at the space in and around Dev/Ops observability to rationalize this space. What you will see in his write-up is a broad space of tools designed to provide information out of telemetry data.

While these tools provide some form of usable measures, the PT is challenged with something a bit higher level. Yes, we need to know the health and well-being of systems, but the PT is driven by metrics that help them understand if they are making a systemic difference in IT delivery. Are we shortening the software supply chain or making it more complex? Are we limiting our ability to deliver by introducing human approvals? How are my investments (in people and tools) paying off (or not) at the PT level?

As such, the “planning and productivity” category above becomes very interesting as we look to improve application delivery system-wide.

Briefly - Cognitive Load

HashiCorp reduces the cognitive load from a developer/operator standpoint (covered in various other Clouded Vision blogs), while our partners at Jellyfish examine the domain of cognitive load through a relevancy and alignment lens. Does this investment align with the business? Does it yield a worthwhile product at a reasonable cost (in dollars, resources, and time)?

The idea is that developers can improve tooling, and teams can improve processes as meticulously as possible. Still, if they lack alignment with the business, it may not matter. Speed, efficiency, and quality (all important vectors) can't make up for poor prioritization or a misdirected plan. As the Talking Heads said, “It puts you on a ‘Road to Nowhere.’”

The point is that constructing the right thing is more important than building “fast.” “Fast” is undoubtedly essential when I am incentivized against time and budget, but the “right” thing produces, and the “wrong” thing is waste. Developers are continually challenged by the thrash of conflicting business and product priorities and how they deal with the stress of threading that needle. The common knowledge is that when everything is a priority, nothing is a priority, and advice isn’t always heeded in the noise of uncertainty.

In the development world, we often refer to reducing the strain on cognitive load as “limiting work in progress (WIP).” When WIP is limited or reduced, we generally see accelerated flow along development pipelines and shorter waiting periods between different life cycle parts (expressed as shorter lead times, cycle times, review times, etc.). That’s because engineers are allowed more significant focus on fewer things. By improving throughput with automation, developers experience decreased context switching, allowing for better planning and enhanced creativity/innovation. Limiting WIP results in improved developer outcomes, which brings many benefits, like improved talent acquisition and retention! Developers love to work where they can see their hard work hit production fast. While Jellyfish seeks to identify where WIP bottlenecks are, HashiCorp seeks to automate WIP away (as much as possible).

So, the challenge remains: we need more transparency and higher fidelity data that informs us how our stated priorities align with reality, whether those priorities are worth the investments we’ve made, and how good we are at achieving those priorities.

Data and Emotion

Humans (and AI, it turns out) are pushed towards error by bias, which emerges from multiple directions. Elements that drive us to lousy decision-making include:

Our corporate incentives (as I discuss on the amazing Andreas Spanner’s podcast). Those incentives can drive us not to see the big picture but also to work at cross purposes, causing inefficiency. However, we include our organizational bias in our decision-making. Consider Conway’s Law as an example. Without going down the rabbit hole, these are the rules of our game. Improper rules will drive an AI to make catastrophic decisions. Humans may be able to overcome improper rules, but machines play it straight (though sometimes in unexpected ways). Incentives and organizational structure are critical.

Our prior decisions. Once we have decided something is “true,” we ignore evidence to the contrary (a.k.a. Confirmation Bias). This becomes a challenge at all levels of the organization—the more robust the investment, the more robust our commitment to it.

We don’t like change. Change in and of itself causes us emotional discomfort.

In IT, we use specific measures to eliminate emotional bias. Some intend to be purely quantitative, such as A-B feature testing, ticket time, or performance data. Others are more sentiment analysis or qualitative, like Net Promoter Score (NPS)-style surveys. Both efforts have their limits.

In the quantitative analysis, we see the impact in the micro. For example, a click-stream analysis informs us that a new feature is better received than the previous one based on page hits, service attach rates, or similar. However, the CIO may be looking at more of a macro-analysis. Investment in these teams yields more significant company revenue. Investment in these teams yields risk reduction. Macro measures become more opaque as we try to aggregate micro measures and account for gaps between the micros. In our click-stream example, did that new feature produce more revenue or cannibalize a different revenue stream, netting no/negative revenue impact? Unless I can see the macro, the dev team declares victory and moves on.

Qualitative measures are just that: qualitative. When tracking things like developer experience through survey results, we must remain cognizant of the emotional response to change. When we ask someone to do a job differently, expect satisfaction to dip, then return to normal (with a temporary bump if your automation improves the user’s life). However, the “joy” factor is short-lived. Very quickly, there is a new “normal,” so your DevEx sentiment returns to your “normal” before the change. Measures like sentiment are useful, in this case, in determining where you are on adoption but generally are not as helpful as to the desired outcome. If sentiment has returned to our “normal” range, then we may surmise the practice is now accepted and part of the general operating practice of the team, for example.

What we need is an approach that incorporates both elements. Sentiment to see where we are on the adoption of our new automation (new = dip in sentiment, excited = realized benefit, average = adopted), and then quantitative evaluation that shows company impact (mean-time-to-deliver improved by “x” percent). This combination helps guide positive growth.

Systemic Improvement Requires Systemic Measures

The trend we are responding to at Jellyfish directly addresses this demand for accurate data to support better decisions. Recent analysis of thousands of software engineers and their organizations shows that a new normal of constantly shifting priorities has resulted in poor data hygiene and an overall decrease in visibility into types and priority of work being done at the leadership level - to nearly 25%. The downstream effects? Teams lose alignment with leadership and the business and ultimately lack the resources to do the job and deliver value to customers. In such a world, what does your speed matter? You are misaligned, and value is not delivered, so money is wasted.

The same research states that among the biggest challenges of the past year for software development teams has been a need for clarity and resources for priorities - 32% of teams citing this as their most critical need to achieve goals moving forward.

The need and demand for data to better understand resource needs, processes, and team dynamics is clear. Still, interpretation of that data will ultimately decide the winners and losers here. We know that a confluence of both quantitative and qualitative data will guide the most robust systemic improvements.

Every CIO has a team and a limited amount of time. The challenge is to drive the most significant business impact from engineering efforts. Jellyfish helps to align these micro and macro measures and empowers a data-driven engineering organization to better invest in high-priority projects, reduce unplanned work, and increase the throughput of engineering effort.

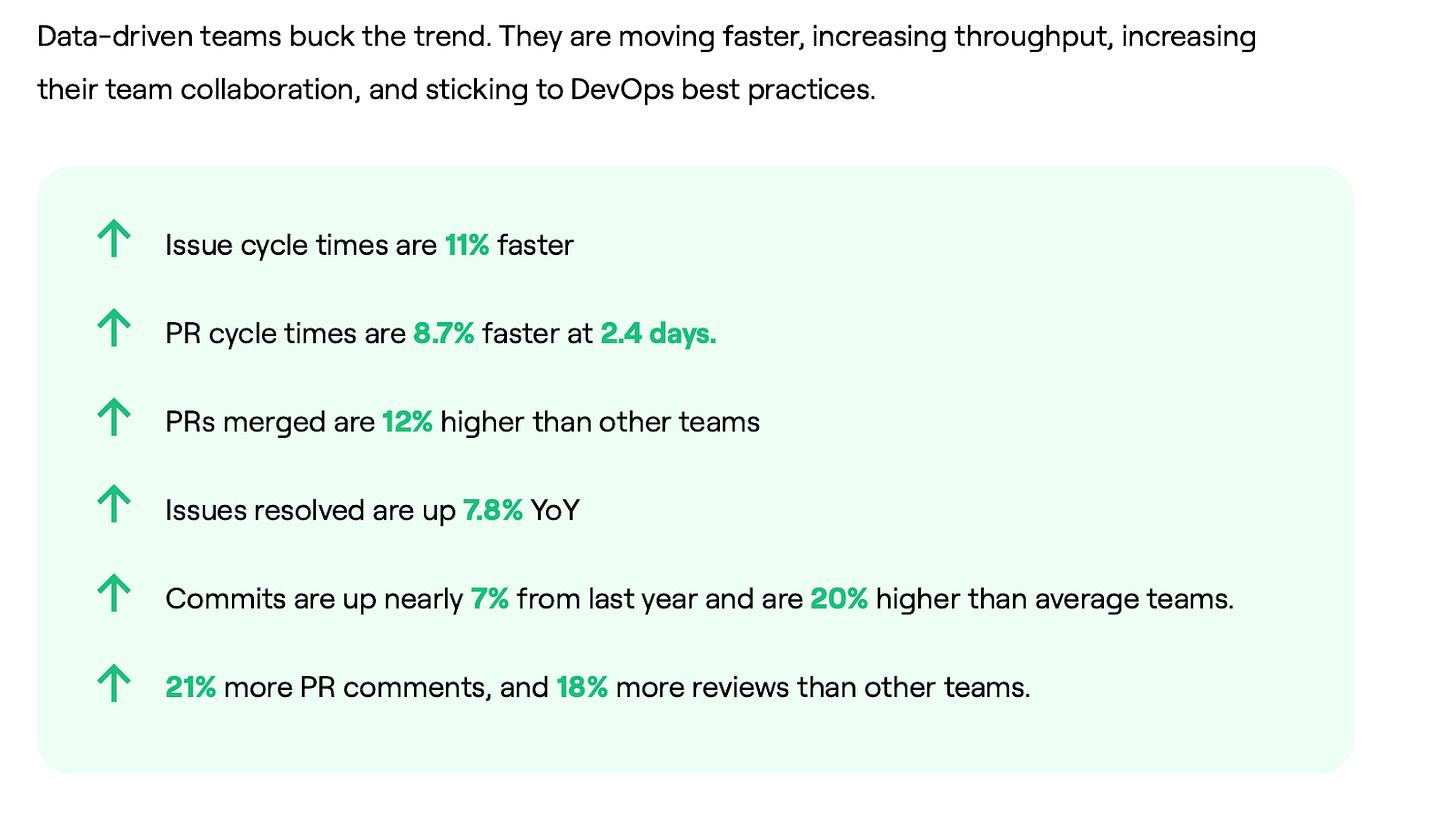

In 2023, teams using an Engineering Management Platform (EMP) and a data-driven approach:

Devote 47% of their efforts to growth and innovation work (31% more than their non-EMP counterparts).

Increase visibility into what work is being done by 46%.

Deliver more software faster.

Source: Jellyfish State of Engineering Management report (2023)

By combining transparency and data, companies gain a deeper understanding of the unique workflows that comprise their SDLC processes. This demystifies R&D motions to their business stakeholders and unlocks a more strategic conversation about the relative tradeoffs of projects and resources.

At HashiCorp, we practice a mantra of “workflow over technology.” Progressively, we are driven to help platform teams achieve systemic improvement to delivery, not just in terms of speed but improved risk disposition and cost. However, it is common to find that some of the changes we introduce shift costs from one place to another or require pre-paying in automation upfront to reduce downstream operations costs. While we see tremendous successes with our customers, we find gaps in our understanding of the broader impact of our automation work at scale. As we work with folks like the brilliant teams at Jellyfish, we hope to understand the macro impact of the automation and workflow efforts that drive Platform Team value. If we automate the delivery of “Landing Zones” in a cloud vendor, we will improve some costs and governance. However, if Mean-time-to-deliver (idea to production) remains flat, we must have missed a bottleneck elsewhere in the software delivery chain (maybe networking?). How do we find that bottleneck? We cannot rely on purely human perceptions. Those feelings are essential but are much more helpful when balanced against quantitative data.

Conclusion

Beyond technical proficiency and cost awareness, we need to be more precise on what dollars are good dollars to spend vs. wasteful. In IT, we are inundated with an ocean of possibilities for improvement (in the Army, we called it a “target-rich environment”). How do we prioritize and choose where the dollars should flow? Our teams have many ideas and are emotionally committed to many paths. However, a clear view of what dollars already produce value and how new dollars could improve our outcomes will help mitigate our lousy decision-making. Does having good data mean we will stop making bad decisions? Certainly not. But it will help us recognize where we are producing waste and reduce the time to create a new opportunity.

As we consider the central role of the platform team, consider carefully how you measure and incent the team to drive optimization. Without clear goals and measures, these teams likely fall to picking favorite technologies rather than the most effective ones.